DNA Day Makes Us Feel Like Rock Stars

This year’s DNA Day arrives at a heady time for advances with the world’s most important molecule: scientists have edited DNA in a human zygote for the first time, we’re closer to a fully finished human reference genome than ever before, and the community is making major strides in using DNA to store data.

It’s humbling to be part of a field where transformations are happening so quickly and with such frequency. What’s being accomplished today is truly amazing, especially when we consider that June 2000 saw the White House announcement of the first drafts of the human genome sequence from the Human Genome Project and Celera. Fifteen years ago, telling our friends and family about working in the genomics field was the ultimate conversation-stopper; today, we feel like rock stars when people learn that we’re part of this exciting industry.

DNA Day celebrates both the completion of the Human Genome Project in April 2003 and the seminal paper on the structure of DNA from Watson, Crick, and collaborators in 1953. When we think about how much has been learned about DNA since those first studies, it’s staggering: from epigenetics to CRISPR, from transposable elements to folding properties, we have come so far in such a short period of time. Now biology is entering the realm of big data, and DNA sequencing has led the way.

Of course, there’s still a long way to go. We believe that public education is particularly important; in a recent survey of consumers, the vast majority of respondents said that “any food containing DNA” should be labeled as such. It’s sad that even as we’re making incredible leaps forward in our understanding of DNA, so many people still have little or no education about this molecule and its function in the world. We hope that the community finds new and innovative ways to inform the public as it continues this unprecedented pace of biological discovery.

We wish you and yours a happy DNA Day!

Video Protocol: NGS-based Methylation Mapping with Pippin Prep

We always love a great protocol video, and this one from scientists at Weill Cornell Medical College, published through the Journal of Visualized Experiments, is a keeper. Check it out here: “Enhanced Reduced Representation Bisulfite Sequencing for Assessment of DNA Methylation at Base Pair Resolution.”

The protocol, which can also be viewed the old-fashioned way here, is an NGS-based approach to map DNA methylation patterns across the genome and was developed as an alternative to microarrays. The Cornell scientists and their collaborator at the University of Michigan present a step-by-step recipe for using a restriction enzyme in combination with bisulfite conversion to achieve base-pair resolution of methylation data. The entire method spans four days.

“Reduced representation of whole genome bisulfite sequencing was developed to detect quantitative base pair resolution cytosine methylation patterns at GC-rich genomic loci,” the scientists report. The data generated “can be easily integrated with a variety of genome-wide platforms.”

In the protocol, the scientists call for automated DNA size selection with Pippin Prep, provided there’s enough input material to make it possible (25 ng or more). You can watch the process (just past the 4-minute mark in the video) or read about it in section 5.1 of the paper.

PippinHT in the Wild: High-Throughput ChIP-Seq at Whitehead Core Lab

At the Whitehead Institute’s Genome Technology Core, scientists handle a lot of ChIP-seq and RNA-seq projects. To boost capacity in library prep, they recently upgraded from a small fleet of Pippin Prep instruments to the new PippinHT for high-throughput, automated DNA size selection.

Technical assistant Amanda Chilaka uses the PippinHT very often — primarily for ChIP-seq library prep — and says that in just three or four months it has become the preferred size selection instrument in the core lab. Compared to the Pippin Prep, Chilaka says, the speed and capacity of the PippinHT are a big improvement. “The PippinHT size selection is a little bit more precise and it’s faster as well,” she adds.

One benefit Chilaka finds particularly useful is the ability to cut different libraries at different size ranges in a single run. She also notes that sizing from one sample to another is very consistent.

“I can’t imagine us ever going back to cutting gels with a scalpel at this point,” Chilaka says. “I definitely recommend the PippinHT for anyone doing library prep or working at a high-throughput lab. It’s incredibly helpful.”

TGAC Bioinformaticians Benefit from SageELF

Darren Heavens has witnessed a fascinating transition at The Genome Analysis Centre as the Norwich, UK-based institute shifted from data-generation mode to data-analysis mode. When the center launched more than five years ago, there was a fairly even split between laboratory-based scientists and bioinformaticians, Heavens says; today, there are about 15 laboratory scientists and nearly 70 bioinformaticians. The focus is on generating great data that lets the bioinformatics experts perform the highest-quality analyses.

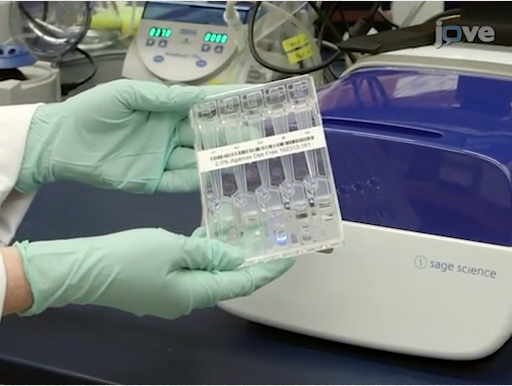

Heavens, a team leader in the Platforms and Pipelines group, spends a lot of his time figuring out how to make the data produced at TGAC more amenable to bioinformatics crunching. One of the newest weapons in his arsenal is the SageELF, an automated system that produces 12 contiguous fractions from a DNA sample.

His prior experience with Sage Science instruments came from the BluePippin, which he began using for size selection of NGS libraries after a TGAC bioinformatician presented data on the variability of insert sizes in libraries he was trying to assemble. “He did the data analysis and found that BluePippin sizing improved his outputs no end,” Heavens recalls.

So it was a no-brainer for Heavens to try out the new SageELF, which he’s been using for a few months now. “It’s great because it gives us the chance to make multiple libraries from one sample,” he says, noting that this helps keep reagent and other costs in check. For experiments requiring a very specific insert size, Heavens likes to run a sample on the SageELF and map the fractions to assembly data to determine which best meets the criteria before going ahead with the rest of the experiment.

His team uses the instrument for long mate-pair NGS projects, restriction-digest sequencing, and sequencing projects focused on copy number variation. For CNVs, Heavens and his colleagues came up with a protocol using SageELF to separate PCR products; they then sequence the largest fraction to get an accurate view of the highest copy numbers present in the sample. “That gives us the true copy number,” he says. “The duplicated genes themselves are so similar that if you don’t have the full-length fragment, they just collapse down in the assembly.” The protocol, which they developed for a project for one client, was so successful that several other clients have now come to TGAC asking for the same method for their samples, Heavens says.

The biggest advantage of SageELF compared to other fractionation methods is its recovery, according to Heavens. His team gets 40% to 45% recovery from input material with the platform, while “with a manual approach you’d be lucky to get 10% to 15% recovery,” he says. “For us that’s a big plus.” He notes that scientists working with precious samples might find SageELF particularly useful for making the most of input DNA.

Heavens says setup and training were simple and straightforward, and that his team is now running the SageELF at or near capacity, which equates to two runs per day of two cassettes each. Since each cassette yields 12 fractions, that’s 48 fractions each day that the TGAC team could potentially use for sequencing. “It has opened up so many avenues for us,” Heavens says.
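For anyone planning their own throughput, the capacity figure above is simple multiplication. Here is a minimal sketch in Python; the function and parameter names are purely illustrative and are not part of any Sage Science or TGAC software.

```python
def fractions_per_day(runs_per_day: int,
                      cassettes_per_run: int,
                      fractions_per_cassette: int = 12) -> int:
    """Upper bound on fractions collected per day at full capacity.

    The default of 12 fractions per cassette comes from the post;
    the other values are supplied by the caller.
    """
    return runs_per_day * cassettes_per_run * fractions_per_cassette


# Figures quoted by Heavens: two runs per day, two cassettes per run.
daily_fractions = fractions_per_day(runs_per_day=2, cassettes_per_run=2)
print(daily_fractions)  # 48
```

Changing either argument shows how quickly the number of candidate libraries grows with each added run or cassette.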

New Structural Variant Analysis Method Uses Targeted Capture and Sequencing of Large-Insert DNA

In a new BMC Genomics paper, scientists from Baylor College of Medicine describe a new method for accurate, affordable interrogation of structural variants across the human genome. We’re delighted to see that automated DNA size selection tools from Sage Science contributed to this important approach.

In the paper, lead authors Min Wang and Christine Beck, along with collaborators from Baylor’s genome center, cite the need for a method like this based on the difficulties of using next-gen sequencing for structural variant analysis. Short-read technologies generally produce sequence data that doesn’t span the variants, making it impossible to align and assemble them accurately. Long-read technology has shown great promise, but has been too expensive for large-scale, genome-wide analyses, the authors note.

So they developed a target-capture approach to enrich for structural variants at particular chromosomal locations. With oligo capture, they target specific insert sizes using the Pippin Prep for fragments up to 1 kb and BluePippin for anything larger. After library prep is completed, the selected DNA is sequenced on a PacBio instrument. The process is known as the PacBio-LITS (large-insert targeted capture-sequencing) method and is especially noteworthy because it’s the first report of targeted sequencing for libraries with insert sizes greater than 1 kb.
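The instrument choice described above boils down to a single size threshold. As a rough illustration only, it could be written like this in Python. The function name is hypothetical, and treating "up to 1 kb" as an inclusive cutoff of 1,000 bp is our assumption rather than something the paper specifies.

```python
# Assumption: "up to 1 kb" is read as an inclusive 1,000 bp cutoff.
PIPPIN_PREP_MAX_BP = 1_000


def choose_size_selection_instrument(target_insert_bp: int) -> str:
    """Return the instrument the post associates with a target insert size."""
    if target_insert_bp <= 0:
        raise ValueError("target insert size must be a positive number of base pairs")
    if target_insert_bp <= PIPPIN_PREP_MAX_BP:
        return "Pippin Prep"
    return "BluePippin"


# Hypothetical target sizes, chosen only to show both branches.
for size_bp in (800, 1_000, 6_000):
    print(size_bp, "->", choose_size_selection_instrument(size_bp))
```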

In this method, size selection is an essential step to the success of the pipeline. “Manual gel-extraction methods involving agarose gel electrophoresis can be used, but we have chosen Sage Science’s Pippin and BluePippin platforms to perform target size selection for improved accuracy and sample recovery,” the authors write, adding that they use “range mode” to preserve DNA complexity from the sample.

The Baylor team presents data from a study of three samples from patients with Potocki–Lupski syndrome. Scientists used PacBio-LITS to analyze structural rearrangements associated with the disease, looking particularly at breakpoint junctions of low-copy repeats (LCR). “We successfully identified previously determined breakpoint junctions … and also were able to discover novel junctions in repetitive sequences, including LCR-mediated breakpoints,” the authors write.

The team posits that beyond structural variation, this new method could also be useful for validating indels and phasing haplotypes.

Check out the full paper: “PacBio-LITS: a large-insert targeted sequencing method for characterization of human disease-associated chromosomal structural variations.”